Will the Apple Vision Pro Change the World?

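For detailed specifications, pricing, a product sample containing some actual data, and an online preview of the related research report, Biannual AR/VR Display Technology and Market Report (includes a support web conference with the lead analyst), please contact us.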

冒頭部和訳

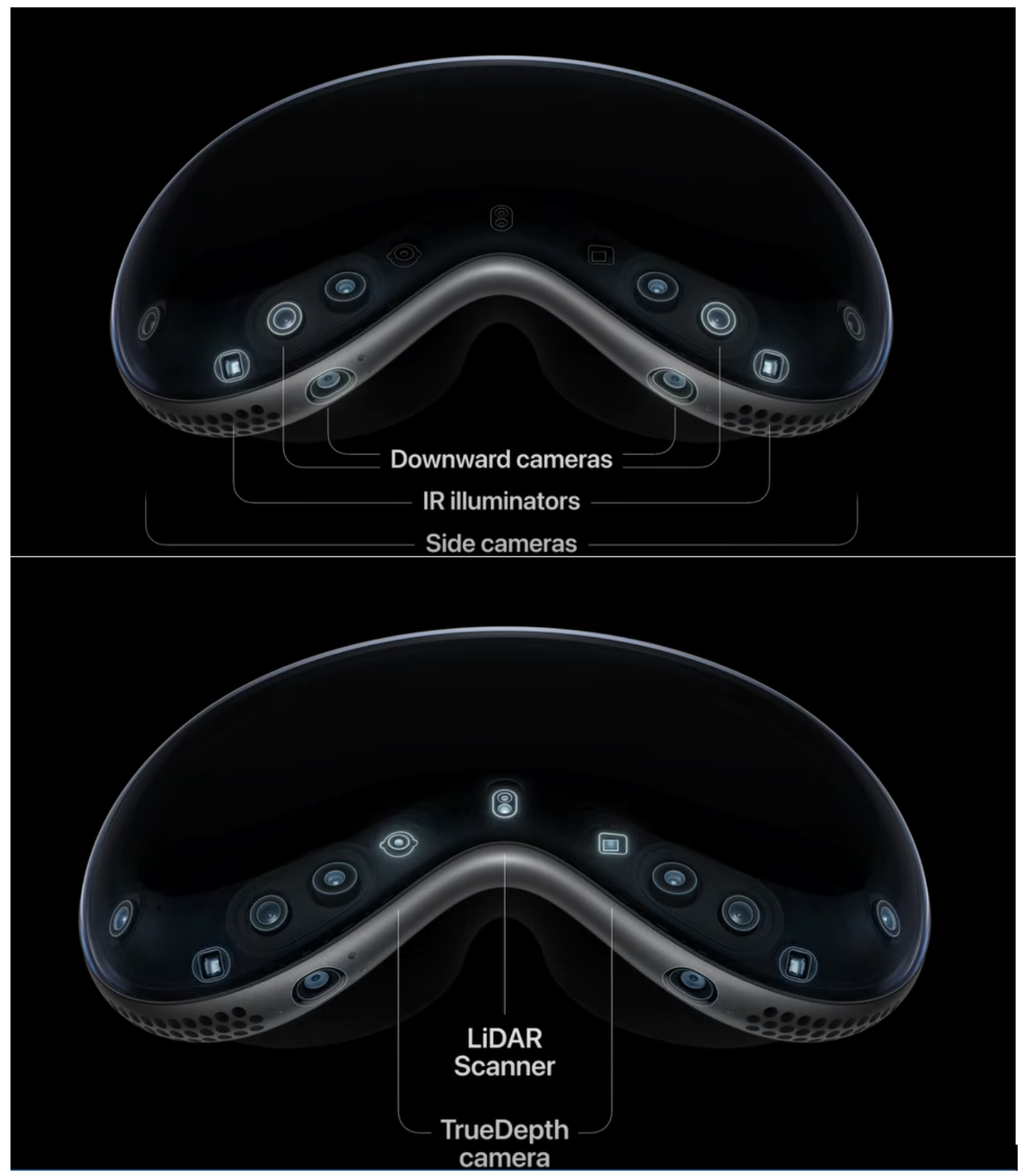

Appleがついに、今週開催のWWDCで初のAR/VRヘッドセットを発表した。Apple Vision Proは米国では「来年初め」に、桁外れの3499ドルで発売される予定だ。Appleは「歴史上最も進歩したパーソナル電子デバイス」であると言って価格は正当なものだとしているが、それは正しいかもしれない。Apple Vision Proは3つのディスプレイパネル、空間オーディオシステム、12のカメラ、5つのセンサー、6つのマイクを搭載しており、M2チップとセンサーアレイ用の新型R1チップを備えている。

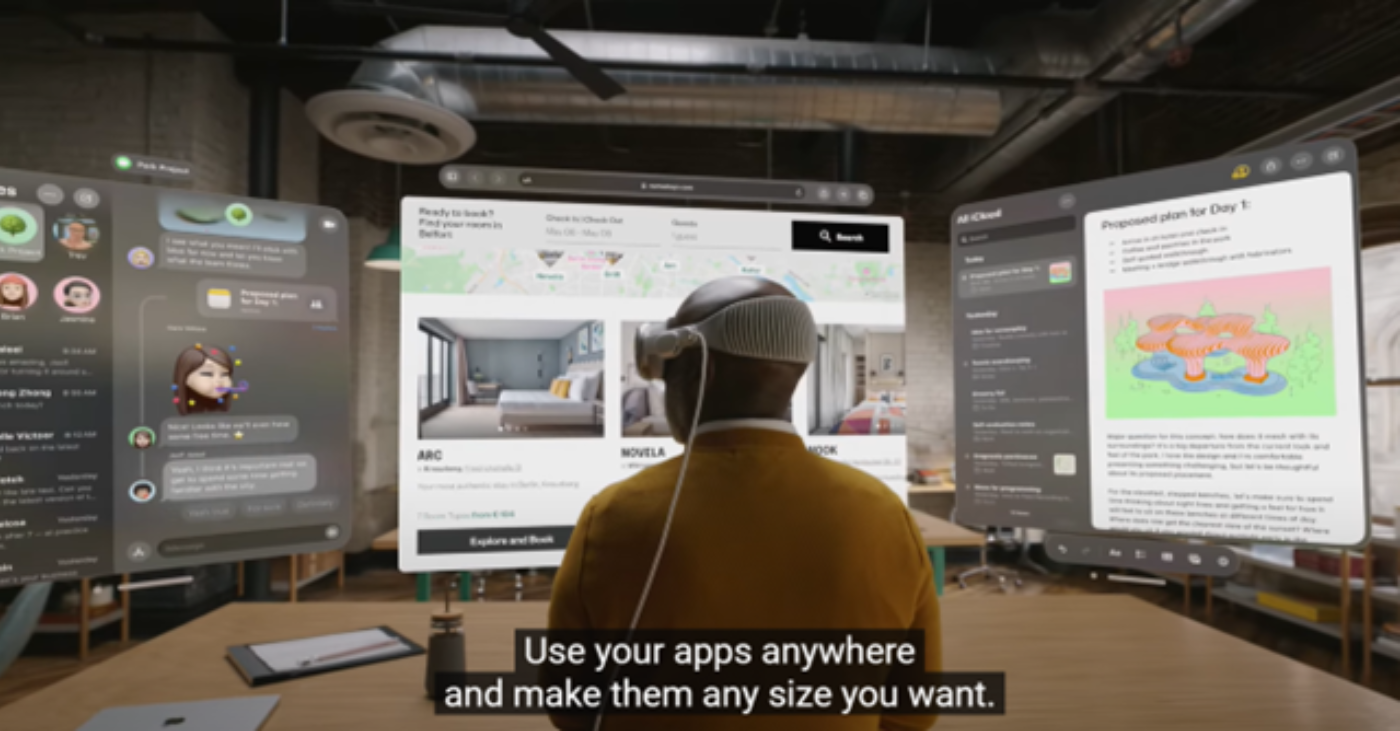

まず、このデバイスの名前について説明しよう。これは近い将来、もっと手頃な価格の、Pro版ではないデバイスが登場する可能性を示唆している。Appleも明確な声明を発表している。このヘッドセットのすべてはプレミアムで高解像度の視覚体験を得るためのものだ、と。発売前には、AppleはReality Proと名付けるのではないかという憶測があり、それはAR/VRヘッドセットとしては理にかなった見方だった。しかし、AppleはプレゼンテーションでVision Proについて、モニターやテレビ、さらには映画館のスクリーンなど、これまでのスクリーンをどのように置き換えられるか、という点を中心に説明した。Vision Proが有能なVRヘッドセットであることは間違いないが、メタバースはおろか、バーチャルワールドの探索についても言及されることはなかった。さらにAppleはVision ProをARデバイスとして紹介し、「Mixed Reality (複合現実)」という用語の使用を避けたのである。

これはFPD業界にパラダイムシフトをもたらすかもしれない。消費者が、1つのヘッドセットで複数の画面にアクセスできるようになるのである。さらに、Apple のヘッドセットの2つのメインディスプレイは OLED-on-Silicon技術 (Micro OLEDと呼ばれるケースが多い) に基づいているため、バックプレーンの製造はディスプレイ生産ラインではなく半導体ファウンドリで行われる。

DSCCは Biannual AR/VR Display Technology and Market Report の発刊時に、OLED-on-Siliconが他のFPD技術以上に急速に市場シェアを獲得し、最終的にはAR/VR市場を支配すると予測した。AppleはヘッドセットにOLED-on-Siliconを採用した最初の企業ではないが、同社のディスプレイはサイズが大きく、解像度も高い。

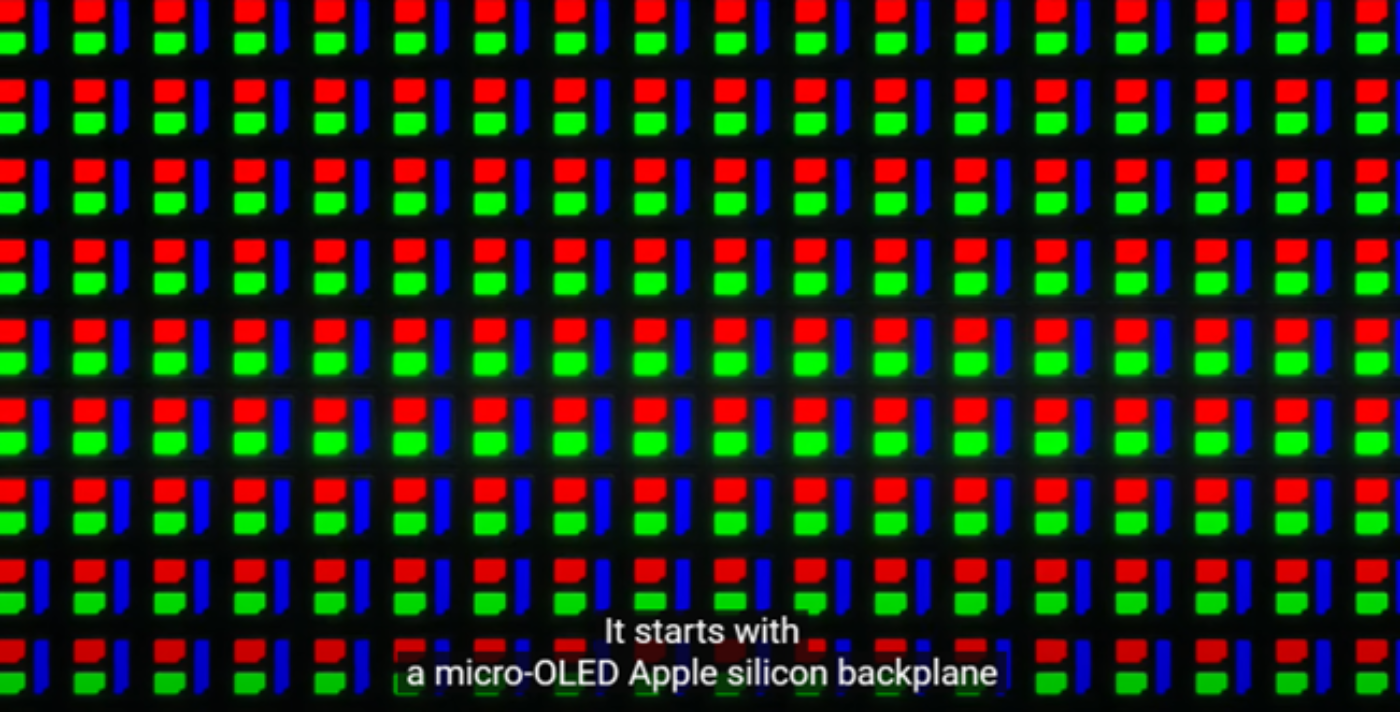

以前にレポートしたように、Appleは片目に4K解像度の1.4インチディスプレイを2枚使用している。Appleは基調演説でこのディスプレイについて「当社がこれまでに製造した中で最も先進的なディスプレイ」であると述べている。もちろん、Appleが自社でディスプレイを製造しているわけではない。シリコンバックプレーンはTSMC製、OLEDフロントプレーンはソニー製だ。Apple はディスプレイシステムが「Apple シリコンチップ上に」構築されていると述べているが、バックプレーンはAppleのM1およびM2チップに使用されているのと同じ5nmプロセスで製造されてはいない。実際にはAppleはコストを削減しバックプレーンの非常に低い歩留まりを補う目的で旧型プロセスノードを使用していると考えられる。

Appleによると、各ピクセルの幅は7.5 μmで、2つのパネルに合計2300万個のピクセルがある。これは片目あたり約3400PPI、約3800×3000の解像度に相当する。これに比べ、Bigscreen Beyondの解像度は片目あたり2560×2560である。

※ご参考※ 無料翻訳ツール (DeepL)

Apple finally unveiled its first AR/VR headset at WWDC this week. The Apple Vision Pro will be available in the US “early next year” for a whopping $3499. Apple justifies the price by saying it is the “most advanced personal electronics device ever” and they may be right. The Apple Vision Pro has three display panels, a spatial audio system, 12 cameras, five sensors, six microphones and is powered by the M2 chip along with a new R1 chip for the sensor arrays.

First, let’s talk about the name of the device. It suggests that there could be a more affordable non-Pro device in the near future. Apple is also clearly making a statement: this headset is all about getting a premium, high-definition visual experience. Before the launch, there was speculation that Apple could call it the Reality Pro, which would have made sense for an AR/VR headset. However, Apple’s presentation mostly focused on how the Vision Pro can replace existing screens such as monitors, TVs, or even cinema screens. While the Vision Pro is definitely a capable VR headset, there was no mention of exploring virtual worlds, let alone a Metaverse. Apple also introduced the Vision Pro as an AR device and stayed clear of the term “Mixed Reality”.

For the display industry, this is the potential paradigm shift: consumers can have access to multiple screens with a single headset. Moreover, the two primary displays in Apple’s headset are based on OLED-on-Silicon technology (often labelled as Micro OLED), so the backplanes are made in semiconductor foundries instead of display fabs.

When we released our Biannual AR/VR Display Technology and Market Report, we predicted that OLED-on-Silicon would quickly gain market share over other display technologies and eventually dominate the AR/VR market. Apple is not the first company to adopt OLED-on-Silicon for a headset, but their displays are larger and with a higher resolution.

As we previously reported, Apple uses a pair of 1.4” displays with a 4K resolution per eye. During the keynote, Apple said they were the “most advanced displays we’ve ever made”. Of course, Apple does not manufacture the displays itself. The silicon backplane is made by TSMC, while the OLED frontplane is from Sony. Although Apple states the display system is built “on top of an Apple silicon chip,” the backplane is not manufactured with the same 5nm process that has been used for Apple’s M1 and M2 chips. In fact, we believe that Apple is using a legacy process node to reduce the cost and compensate for the very low yield of the backplane.

According to Apple, each pixel is 7.5 μm wide and there are 23M pixels across the two panels. That would correspond to roughly 3400 PPI and a resolution of approximately 3800 × 3000 per eye. For comparison, the Bigscreen Beyond has a resolution of 2560 × 2560 per eye.
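The arithmetic behind these figures can be checked with a short Python sketch. It uses only the numbers quoted above (7.5 μm pixel pitch, 23M pixels across two panels, an approximate 3800 × 3000 grid per eye) and assumes square pixels on a uniform pitch, which Apple has not confirmed. The variable names are illustrative.

```python
import math

MICRONS_PER_INCH = 25_400  # 1 inch = 25.4 mm = 25,400 micrometres

# Figures quoted in the article.
pixel_pitch_um = 7.5                 # width of one pixel
total_pixels = 23_000_000            # across both panels
approx_resolution = (3800, 3000)     # estimated grid per eye

# Assumption: square pixels laid out on a uniform pitch.
ppi = MICRONS_PER_INCH / pixel_pitch_um
pixels_per_eye = total_pixels / 2
grid_pixels = approx_resolution[0] * approx_resolution[1]

# Diagonal of the active area implied by the grid and the pitch.
diagonal_in = math.hypot(*approx_resolution) * pixel_pitch_um / MICRONS_PER_INCH

print(f"Pixel density: {ppi:.0f} PPI")
print(f"Pixels per eye: {pixels_per_eye:,.0f} (grid estimate: {grid_pixels:,})")
print(f"Implied diagonal: {diagonal_in:.2f} in")
```

Under these assumptions the pitch works out to about 3387 PPI, and a 3800 × 3000 grid gives 11.4M pixels per eye with a diagonal of about 1.43 inches. That is consistent with both the 23M-pixel total and the 1.4” panel size.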

Another reason for the high brightness is that the optics will lose a large amount of light. Apple says they use a custom three-element lens but does not give any indication of how efficient it is. Most pancake lenses designed for VR have an efficiency of 25% or lower. At the AR/VR Display Forum, eMagin discussed this issue and said that a brightness of 10,000 nits or above was required to compensate for the short duty cycle and the low optical efficiency.
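A rough brightness budget shows why such high panel luminance is needed. The sketch below treats the luminance reaching the eye as the panel luminance multiplied by the optical efficiency and the duty cycle (a time-averaged simplification). The 25% efficiency and 10,000 nits come from the figures above. The 10% duty cycle and the function names are assumptions made purely for illustration, not values disclosed by Apple or eMagin.

```python
def luminance_at_eye(panel_nits: float, optical_efficiency: float, duty_cycle: float) -> float:
    """Time-averaged luminance after lens losses and pulsed illumination."""
    for name, value in (("optical_efficiency", optical_efficiency), ("duty_cycle", duty_cycle)):
        if not 0 < value <= 1:
            raise ValueError(f"{name} must be in (0, 1], got {value}")
    return panel_nits * optical_efficiency * duty_cycle


def required_panel_nits(target_nits: float, optical_efficiency: float, duty_cycle: float) -> float:
    """Panel luminance needed to deliver target_nits to the eye."""
    return target_nits / (optical_efficiency * duty_cycle)


PANEL_NITS = 10_000        # figure cited by eMagin
OPTICAL_EFFICIENCY = 0.25  # upper end for pancake lenses, per the text
DUTY_CYCLE = 0.10          # hypothetical value, chosen only for illustration

at_eye = luminance_at_eye(PANEL_NITS, OPTICAL_EFFICIENCY, DUTY_CYCLE)
print(f"Luminance at the eye: {at_eye:.0f} nits")
```

With these illustrative inputs, only about 2.5% of the panel luminance reaches the eye. A lower optical efficiency or a shorter duty cycle would push the panel requirement higher still.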

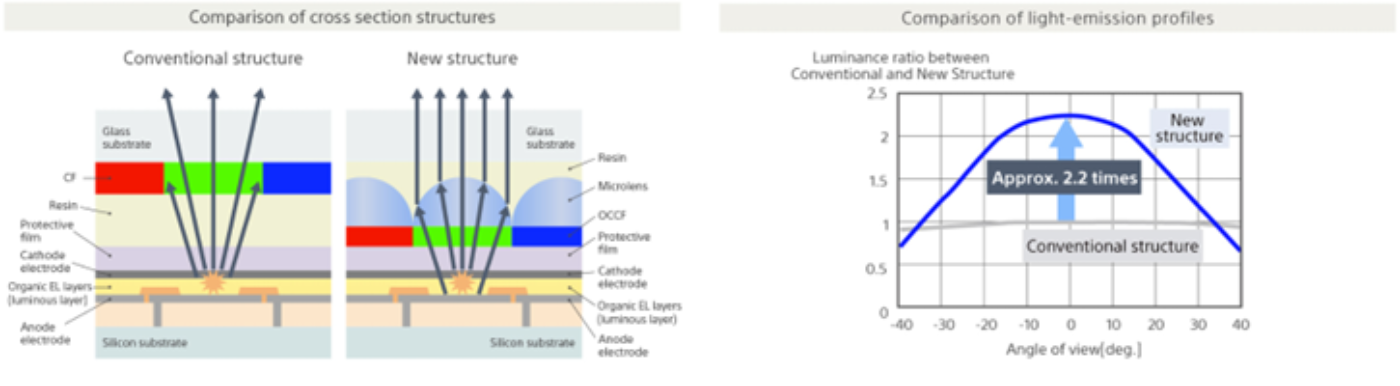

Sony’s technology is based on a white OLED stack and a color filter array. In a paper presented at Display Week 2019, Sony said it had developed a microlens array that changes the emission profile and significantly increases luminance when the display is viewed head-on, along the display normal. At the time, Sony said this could boost luminance by a factor of 1.8. Sony has also moved the color filters closer to the OLED stack to improve efficiency. According to its website, Sony’s current structure increases luminance by a factor of 2.2 compared to the old structure without microlenses. In the case of a VR headset, there is no need for an anti-reflective component, such as a polarizer, since there is no ambient light.

Apple’s user interface is based on eye tracking, hand gestures and voice commands. There is no need for controllers. For eye tracking, Apple has integrated IR cameras and LEDs around the lenses. These also enable Optic ID, a new biometric identification system based on the uniqueness of a person’s iris. Just like with Face ID, data is encrypted, never leaves the device and is only available to the Secure Enclave Processor.

The Apple Vision Pro sits very close to the face, so there is no room for prescription glasses. Apple has partnered with Zeiss to create custom optical inserts that magnetically attach to the eyepieces. While this solution will probably provide excellent optical clarity, there is no indication of how much it will cost. People who wear contact lenses should be able to use the headset directly.

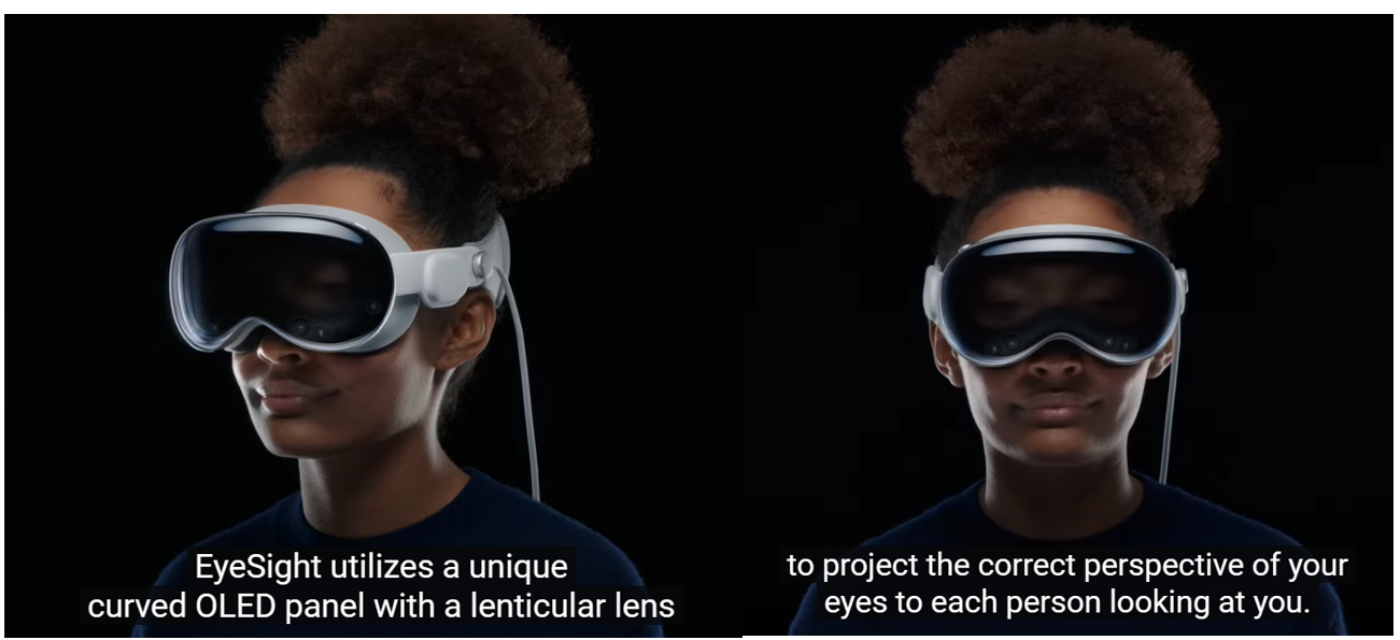

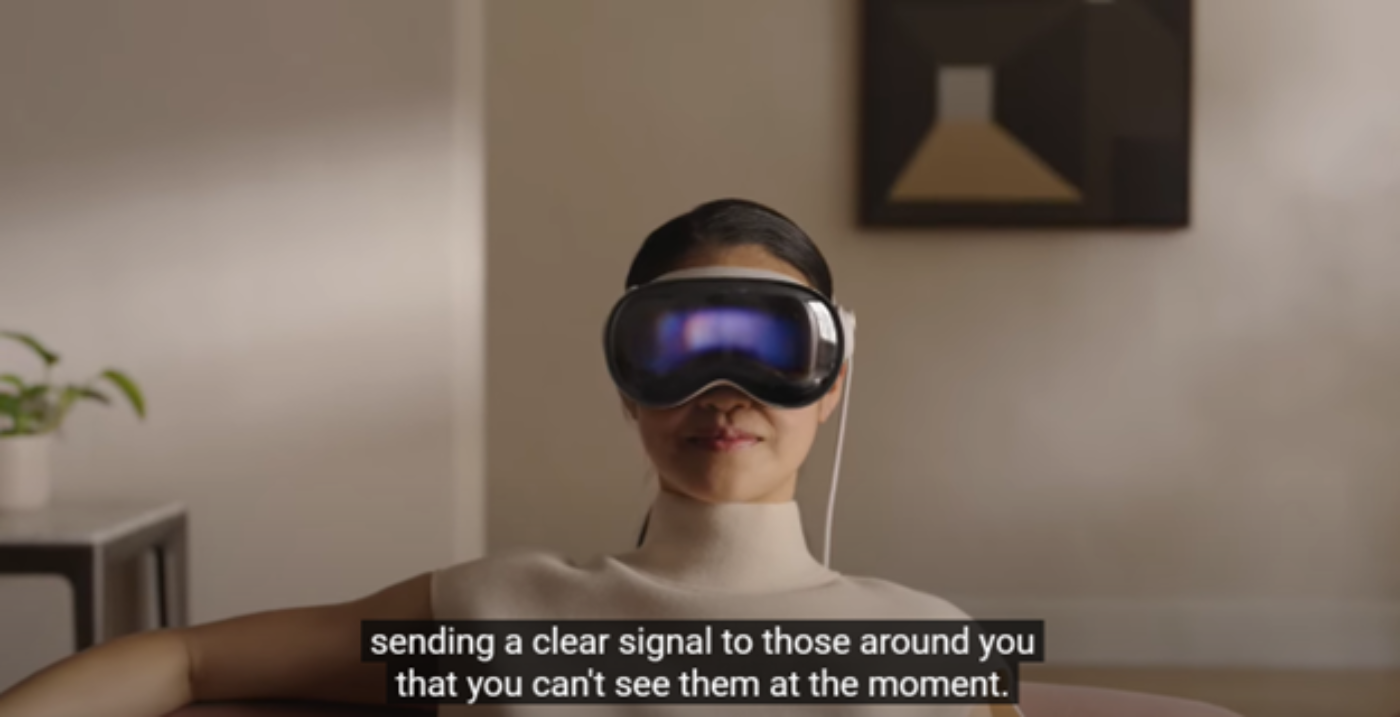

The third display is a flexible OLED panel on the front of the headset. Supplied by LG Display, the panel has a lenticular lens to project different views of your eyes so that each person looking at you will have the correct perspective. Apple calls it EyeSight. This is probably the most surprising feature, since no other headset on the market currently offers reverse passthrough. Apple does not disclose the resolution of the panel, but each additional view will reduce the effective resolution. According to Apple, the result is a 3D display that helps users interact with other people around them:

“When a person approaches someone wearing Vision Pro, the device feels transparent — letting the user see them while also displaying the user’s eyes. When a user is immersed in an environment or using an app, EyeSight gives visual cues to others about what the user is focused on.”
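The resolution trade-off of a multi-view lenticular panel such as the EyeSight display can be illustrated with a simplified model in which the panel's pixel columns are divided evenly among the views. Apple has not disclosed the panel resolution or the number of views, so the 1920-column width and the view counts below are hypothetical. Real lenticular designs (for example, slanted lenses) distribute the loss differently.

```python
def columns_per_view(native_columns: int, num_views: int) -> int:
    """Pixel columns available to each view when columns are split evenly."""
    if num_views < 1:
        raise ValueError("num_views must be at least 1")
    return native_columns // num_views


HYPOTHETICAL_COLUMNS = 1920  # not an Apple figure; for illustration only

for views in (1, 2, 4, 8):
    print(f"{views} view(s): {columns_per_view(HYPOTHETICAL_COLUMNS, views)} columns each")
```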

Final thoughts

There is no doubt that Apple has made a powerful headset that combines an innovative user interface with high-resolution visuals. However, the components are expensive. The displays alone are expected to cost over $350, or 10% of the retail price. It is not clear how Apple will be able to release a more affordable headset without making significant compromises.

The headset supports 3D (stereoscopic) content and features Apple’s first 3D camera. We can expect more Apple products to have a 3D camera in the future. However, Apple has not explained how it will deal with issues like vergence-accommodation conflict (VAC). Most of the examples shown during the keynote presentation were based on 2D screens floating in the field of view.

Battery life is another issue. The external battery pack is easy to fit inside a pocket but only provides about 2 hours of use. I suspect Apple made a conscious decision not to provide a bigger battery, to prevent users from wearing the headset for too long.

Apple sees the Vision Pro as a new type of computing device (spatial computing), as opposed to a gaming console. Apple barely mentioned gaming during the keynote, but one sentence caught my attention: “This is just the start of how gaming will evolve on Vision Pro”. By offering support for Unity-based games, Apple clearly wants developers to start building VR games for the Vision Pro.

How does the launch of the Vision Pro impact our forecast for AR/VR? We already knew production would not start until later this year and that there was a risk shipping would be delayed until next year. Now we know shipments will only begin in 2024. The price of the headset also confirms that volumes will be low. However, Apple’s announcement is generally good news for Meta and other manufacturers offering cheaper headsets (Meta’s Quest 3 will start at $500). Not only is Apple positioned in a different pricing tier, but the Vision Pro will likely stimulate interest in AR/VR amongst the mainstream population. If this is the future of computing, why not start learning now? We will probably upgrade the forecast when we update our report.